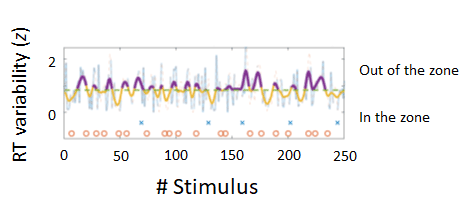

Fluctuation of Sustained Attention

Sustaining attention is critical for adaptive behavior in everyday activities such as reading or driving, and it is an occupational skill for air traffic controllers and surgeons. However, focused attention is easily disrupted by surrounding events (e.g., sudden ringtones) or by fatigue. We found that the level of attention did not decline steadily over time but instead fluctuated. The fluctuations occurred on a similar timescale (25 to 50 seconds) within each individual, regardless of whether the task was visual or auditory. This suggests that, even when the input information differs, attentional fluctuations are influenced by the general biological rhythms of each individual. Using fMRI, we identified brain regions whose activity correlated with attentional fluctuations. The dorsal attention network was implicated in attentional fluctuations, but the default mode network was not. This suggests that brain regions related to attentional focus, rather than to mind wandering, regulate the level of sustained attention.
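As a rough illustration of how a fluctuation timescale of this kind could be estimated, the sketch below scans a set of candidate periods and returns the one with the largest spectral power in a behavioral time series. It is not the analysis used in the papers cited below: the function name `dominant_period`, the 1-second sampling step, and the synthetic 40-second oscillation in response times are all assumptions made for the example.

```python
import math

def dominant_period(series, dt, candidate_periods):
    """Return the candidate period (in seconds) with the largest spectral power.

    series: equally spaced measurements (e.g., response times), hypothetical data
    dt: sampling step in seconds
    candidate_periods: iterable of periods (seconds) to test
    """
    mean = sum(series) / len(series)
    centered = [x - mean for x in series]  # remove the constant offset
    best_period, best_power = None, -1.0
    for period in candidate_periods:
        omega = 2.0 * math.pi / period
        # Project the series onto a cosine and a sine at this period.
        re = sum(x * math.cos(omega * i * dt) for i, x in enumerate(centered))
        im = sum(x * math.sin(omega * i * dt) for i, x in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_period, best_power = period, power
    return best_period

# Synthetic example (assumption): response times oscillating with a 40-s period.
dt = 1.0
response_times = [0.5 + 0.05 * math.sin(2.0 * math.pi * i * dt / 40.0)
                  for i in range(400)]
estimate = dominant_period(response_times, dt, range(10, 101))
print(estimate)
```

With real data, the same scan could be run separately on the visual and auditory task series of one participant to see whether the two peaks fall in a similar range.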

References

Kondo, H. M., Terashima, H., Ezaki, T., Kochiyama, T., Kihara, K., & Kawahara, J. I. (2022). Dynamic transitions between brain states predict auditory attentional fluctuations. Frontiers in Neuroscience, 16, 816735. Invited Article

Terashima, H., Kihara, K., Kawahara, J. I., & Kondo, H. M. (2021). Common principles underlie the fluctuation of auditory and visual sustained attention. Quarterly Journal of Experimental Psychology, 74, 1140-1152.

Auditory and Visual Scene Analysis

Our brain is well equipped to transform a mixture of sensory inputs into distinguishable meaningful object representations. We perceive the world as stable, although auditory and visual inputs are often ambiguous due to spatial and temporal occluders. This photo illustrates many of the cues and factors that are involved in visual scene analysis: texture, contours, color, patterns of light and shade, continuity, and occlusion. The visual scene leads us astray into several interpretations, such as "islands in an ocean" or "a tiger carrying her cubs across the river". This raises important questions regarding where and how this “scene analysis” is performed in the brain. Recent advances from both auditory and visual research suggest that the brain is not simply processing the incoming scene properties but that top-down processes such as attention, expectations and prior knowledge facilitate scene perception. Thus, scene analysis is linked not only with the formation and selection of perceptual objects and stimulus properties, but also with selective attention, perceptual binding, and awareness. (Photo by Chie Miki)

Reference

Kondo, H. M., van Loon, A., Kawahara, J. I., & Moore, B. C. J. (2017). Auditory and visual scene analysis: an overview. Philosophical Transactions of the Royal Society B: Biological Sciences, 372, 20160099.

Integration and Segregation of Auditory Streams

Various sounds reach the ears together in time. However, one can follow a conversation that is acoustically intermingled with competing conversations, loud music, glasses tinkling, and so on. Humans and animals possess the sophisticated ability to integrate complex acoustic inputs into one organized percept and to switch their attention among several auditory streams within those inputs. The sequential integration and segregation of frequency components for perceptual organization, which is called auditory streaming, is essential for auditory scene analysis. Prolonged listening to an unchanging triplet-tone sequence produces a series of illusory switches between a single coherent stream and two distinct streams. The predominant percept depends on the frequency difference between the high and low tones. Our fMRI results demonstrated that the activity of the auditory thalamus occurred earlier during switching from non-predominant to predominant percepts, whereas that of the auditory cortex occurred earlier during switching from predominant to non-predominant percepts. This suggests that feed-forward and feedback processes in the thalamocortical loop are involved in the formation of percepts in auditory streaming.
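To make the stimulus description concrete, the following sketch builds the event list for a repeating triplet-tone sequence. The details are assumptions for illustration and are not taken from the cited papers: a low-high-low triplet followed by a silent slot, a 100-ms slot duration, the name `triplet_sequence`, and a frequency difference expressed in semitones.

```python
def triplet_sequence(low_hz, semitone_diff, n_triplets, tone_s=0.1):
    """Return (onset_seconds, frequency_hz) events for a repeating tone triplet.

    Assumed layout per cycle: low tone, high tone, low tone, silent slot.
    semitone_diff sets how far the high tone lies above the low tone.
    """
    high_hz = low_hz * 2.0 ** (semitone_diff / 12.0)
    events = []
    for k in range(n_triplets):
        start = k * 4 * tone_s  # each cycle spans four slots
        events.append((start, low_hz))
        events.append((start + tone_s, high_hz))
        events.append((start + 2 * tone_s, low_hz))
        # the fourth slot is left silent
    return events

# Hypothetical parameters: 1000-Hz low tone, 6-semitone difference, 3 triplets.
events = triplet_sequence(1000.0, 6, 3)
for onset, freq in events:
    print(f"{onset:.1f} s  {freq:.1f} Hz")
```

Varying `semitone_diff` changes the frequency difference between the high and low tones, which is the factor the text above identifies as determining the predominant percept.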

References

Kondo, H. M., & Kashino, M. (2009). Involvement of the thalamocortical loop in the spontaneous switching of percepts in auditory streaming. Journal of Neuroscience, 29, 12695-12701.

Kondo, H. M., & Kashino, M. (2007). Neural mechanisms of auditory awareness underlying perceptual changes. NeuroImage, 36, 123-130.

Effects of Self-Motion on Auditory Scene Analysis

Many studies have been devoted to understanding the acoustic cues and, more recently, the neural mechanisms underlying auditory scene analysis. However, all of these studies consider a very odd cocktail party: one in which the listener is unable to move their head. Therefore, very little is known about the interaction between the sensory processes of auditory scene analysis and motor processes. Audio-motor processes related to head movements, which are known to help sound localization, should nevertheless be an important component of auditory scene analysis, both initially, as a listener actively explores a novel scene, and in the ongoing maintenance of perceptual organization, as attention-grabbing sounds are likely to induce rapid head turns. Unfortunately, disentangling acoustic and motor cues is difficult because they are fully correlated for real movements. A virtual reality setup, the "TeleHead" system, was used to overcome this difficulty. We clarified the effects of the following potential components on auditory streaming: changes in acoustic cues at the ears, changes in apparent source location, and changes in motor processes. The results demonstrated that the contribution of motor cues is smaller than that of the other two components. (Photo by NTT CS Labs)

References

Kondo, H. M., Toshima, I., Pressnitzer, D., & Kashino, M. (2014). Probing the time course of head-motion cues integration during auditory scene analysis. Frontiers in Neuroscience, 8, 170. Invited Article

Kondo, H. M., Pressnitzer, D., Toshima, I., & Kashino, M. (2012). The effects of self-motion on auditory scene analysis. Proceedings of the National Academy of Sciences of the United States of America, 109, 6775-6780. Press Interest: ScienceNOW, WIRED, Popular Science, The Naked Scientists, Huffington Post

Separability and Commonality of Auditory and Visual Bistable Perception

Previous studies on scene analysis have been conducted independently in the auditory and visual domains, which makes it difficult to obtain a bird’s-eye view of scene-analysis research. The present study used different forms of bistable perception: auditory streaming, verbal transformations, visual plaids, and reversible figures. We performed factor analyses on the number of perceptual switches in the tasks. A three-factor model provided a better fit to the data than the other possible models. These factors, namely the “auditory”, “shape”, and “motion” factors, were separable but correlated with each other. We compared the number of perceptual switches among genotype groups to identify the effects of neurotransmitter functions on the factors. We focused on polymorphisms of the catechol-O-methyltransferase (COMT) Val158Met and serotonin 2A receptor (HTR2A) -1438G/A genes, which are involved in the modulation of dopamine and serotonin, respectively. The number of perceptual switches in auditory streaming and verbal transformations differed among COMT genotype groups, whereas that in reversible figures differed among HTR2A genotype groups. The results indicate that the “auditory” and “shape” factors reflect the functions of the dopamine and serotonin systems, respectively. Our findings suggest that the formation and selection of percepts involve neural processes in cortical and subcortical areas.
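The measure entering these analyses, the number of perceptual switches, can be sketched as a simple count of changes in a participant's sequence of reported percepts. The function name `count_switches` and the percept labels below are hypothetical and serve only as an illustration; the actual response coding in the studies may differ.

```python
def count_switches(reports):
    """Count how often consecutive percept reports differ.

    reports: sequence of percept labels in temporal order (hypothetical coding).
    """
    return sum(1 for prev, cur in zip(reports, reports[1:]) if prev != cur)

# Hypothetical report sequence from one auditory streaming trial.
reports = ["one stream", "one stream", "two streams",
           "two streams", "one stream", "two streams"]
n_switches = count_switches(reports)
print(n_switches)
```

Counts of this kind, one per participant and task, would form the table on which a factor analysis or a comparison among genotype groups could be run.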

References

Kondo, H. M., Farkas, D., Denham, S. L., Asai, T., & Winkler, I. (2017). Auditory multistability: idiosyncratic perceptual switching patterns and neurotransmitter concentrations in the brain. Philosophical Transactions of the Royal Society B: Biological Sciences, 372, 20160110. Invited Article

Kondo, H. M., Kitagawa, N., Kitamura, M. S., Koizumi, A., Nomura, M., & Kashino, M. (2012). The separability and commonality of auditory and visual bistable perception. Cerebral Cortex, 22, 1915-1922.